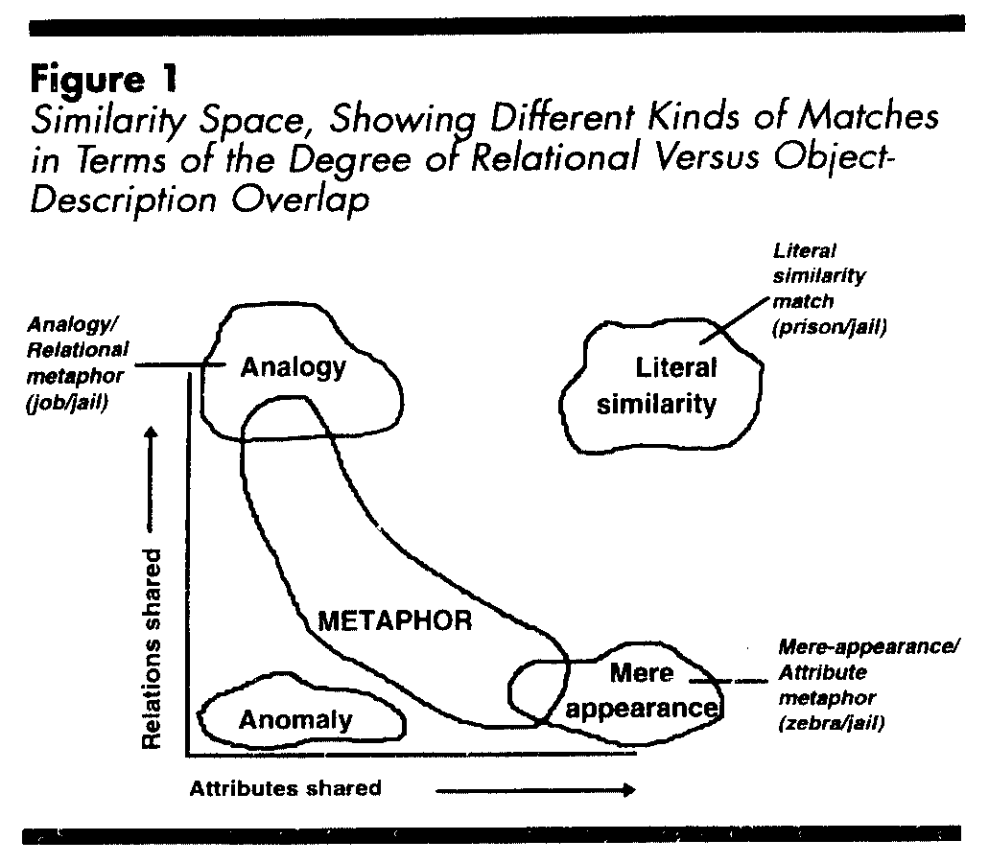

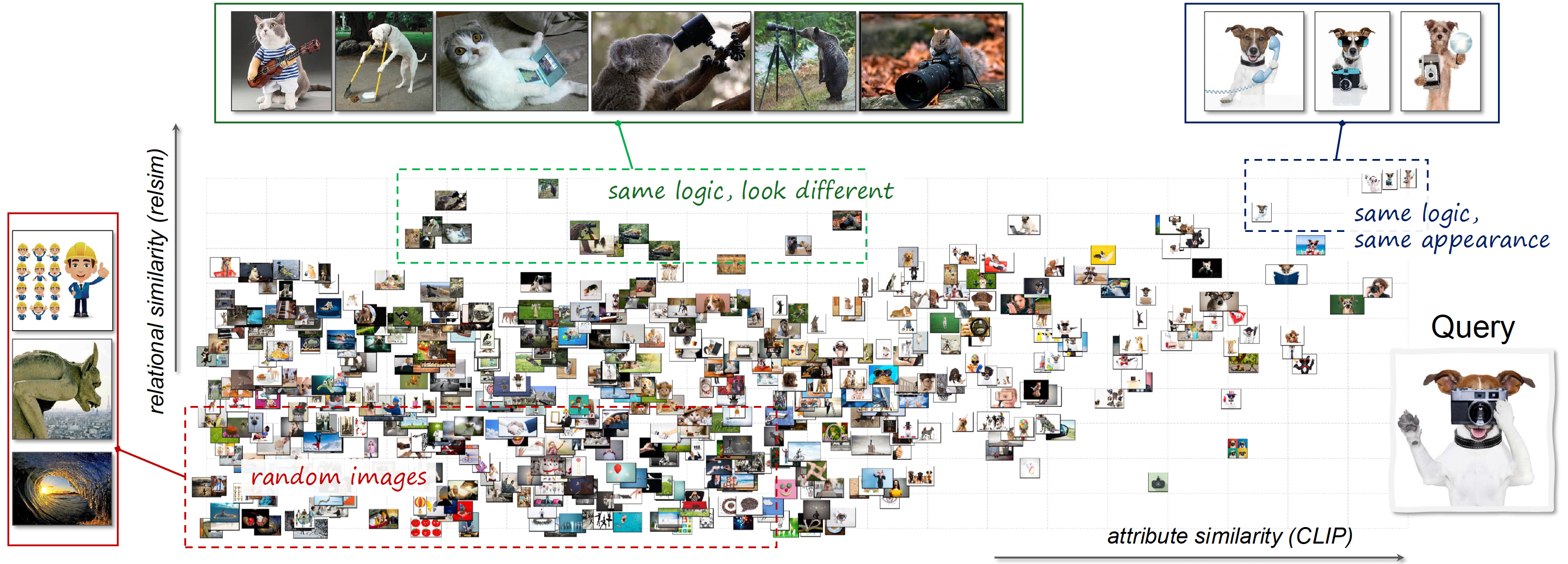

Humans do not just see attribute similarity---we also see relational similarity.

This ability to perceive and recognize relational similarity is argued by cognitive scientists to be what distinguishes humans from other species.

An apple is like a peach because both are reddish fruit, but the Earth is also like a peach: its crust, mantle, and core correspond to the peach's skin, flesh, and pit.


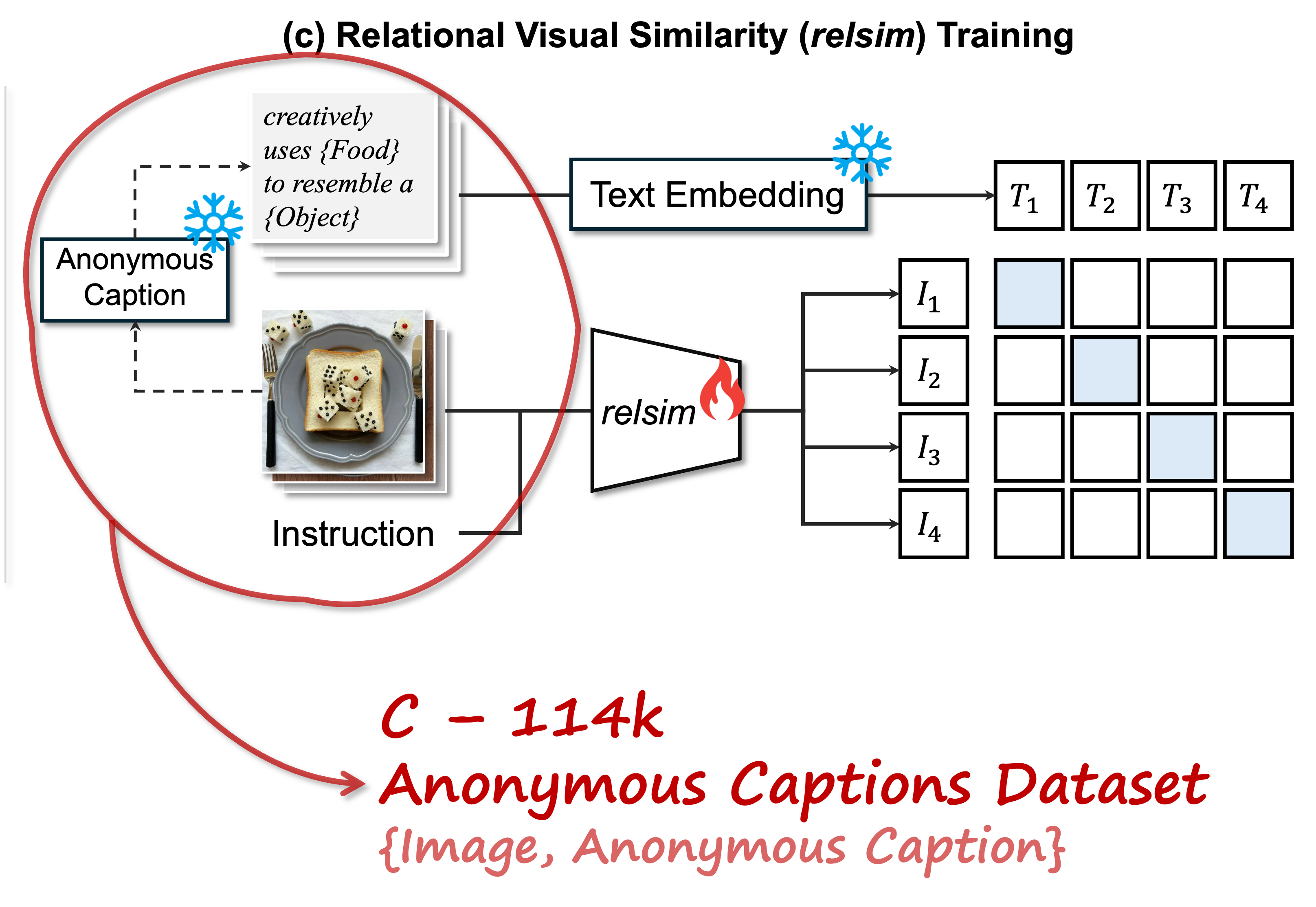

How can we go beyond the visible content of an image to capture its relational properties?

How can we bring images with the same relational logic closer together in representation space?

To answer this question,

Install the package:

    pip install relsim

Then score a pair of images:

    from relsim.relsim_score import relsim
    from PIL import Image

    model, preprocess = relsim(
        pretrained=True,
        checkpoint_dir="thaoshibe/relsim-qwenvl25-lora")

    img1 = preprocess(Image.open("image_path_1"))
    img2 = preprocess(Image.open("image_path_2"))

    similarity = model(img1, img2)
    print(f"relsim score: {similarity:.3f}")
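A common next step after scoring a single pair is to rank several candidate images against one query. The sketch below is one way to do that, not part of the relsim package: `rank_by_score` is a hypothetical helper that accepts any pairwise scoring function, so it can be tried with a stand-in scorer before plugging in the real model. It assumes that whatever the scorer returns can be converted with `float()`.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def rank_by_score(
    query: T,
    candidates: Sequence[T],
    score_fn: Callable[[T, T], float],
) -> List[Tuple[int, float]]:
    """Return (candidate index, score) pairs, highest score first.

    `score_fn` is any pairwise similarity function; higher is assumed
    to mean "more similar". This helper is hypothetical and is not
    provided by relsim itself.
    """
    scored = [
        (index, float(score_fn(query, candidate)))
        for index, candidate in enumerate(candidates)
    ]
    # sorted() is stable, so tied candidates keep their input order.
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Stand-in scorer on plain numbers: closer values score higher.
    toy_scorer = lambda a, b: -abs(a - b)
    for index, score in rank_by_score(5, [1, 4, 10], toy_scorer):
        print(f"candidate {index}: score {score:.3f}")
```

With `model` and `preprocess` loaded as in the snippet above, the same helper could presumably be called as `rank_by_score(query_img, candidate_imgs, model)`, where every image has already been passed through `preprocess`. This relies on `model(img1, img2)` returning a scalar-like value, which the formatted `print` in the original usage suggests but which is worth verifying.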

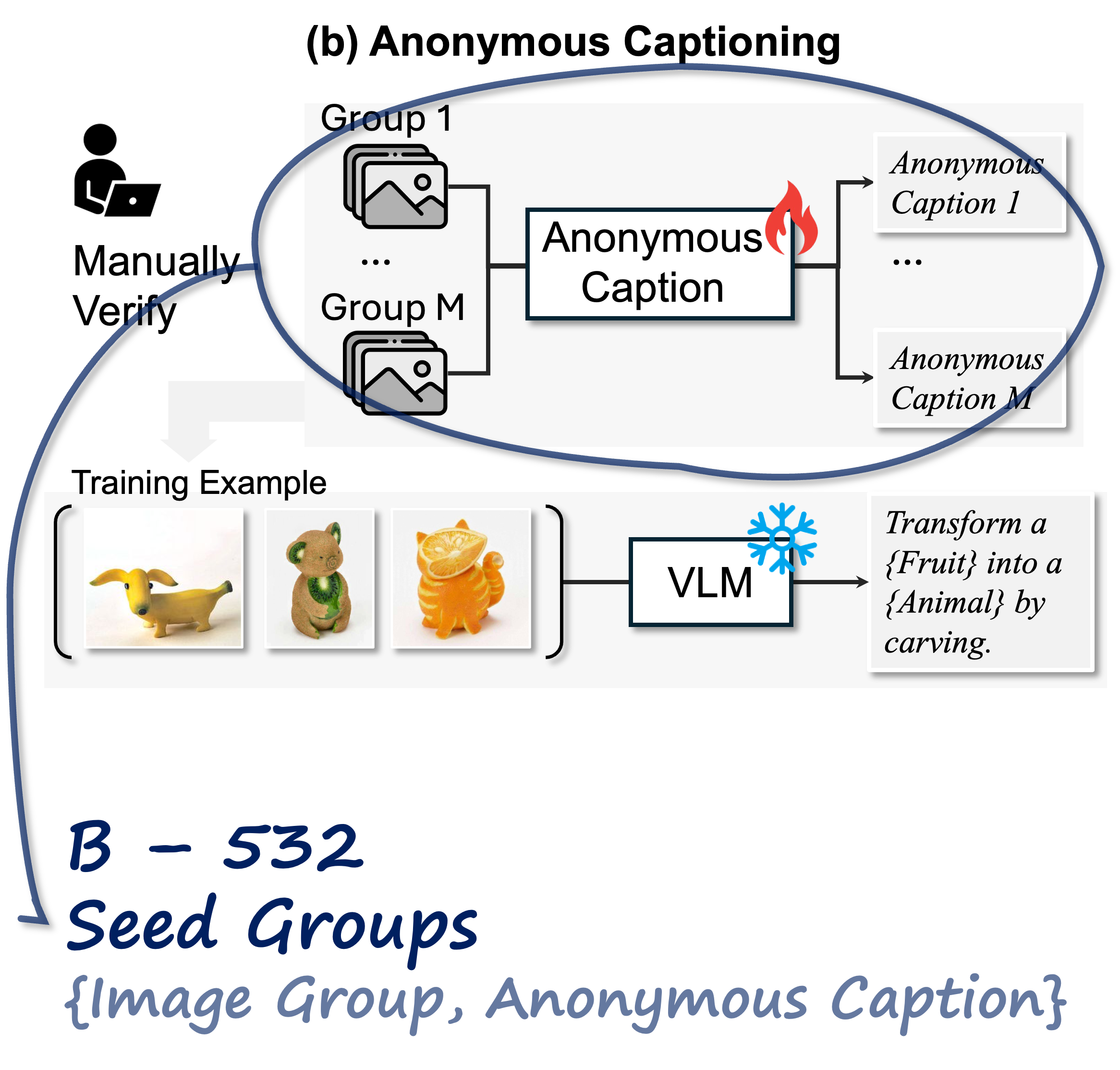

This is a data viewer for the datasets used in the paper. Please click on the image to view the corresponding dataset. You can also see the dataset on HuggingFace.

| Seed Groups (Live View) | Anonymous Captions (Live View) |

Figure cropped from the original paper.


This website template is adapted from visii (NeurIPS 2023) and DreamFusion (ICLR 2023); the source code can be found here and here.

You are more than welcome to use this website's source code for your own project, just add credit back to here.

Thank you!

(.❛ ᴗ ❛.).