|

|||||||||||||||

|

|||||||||||||||

TL;DR: A framework for inverting visual prompts into editing instructions for text-to-image diffusion models.

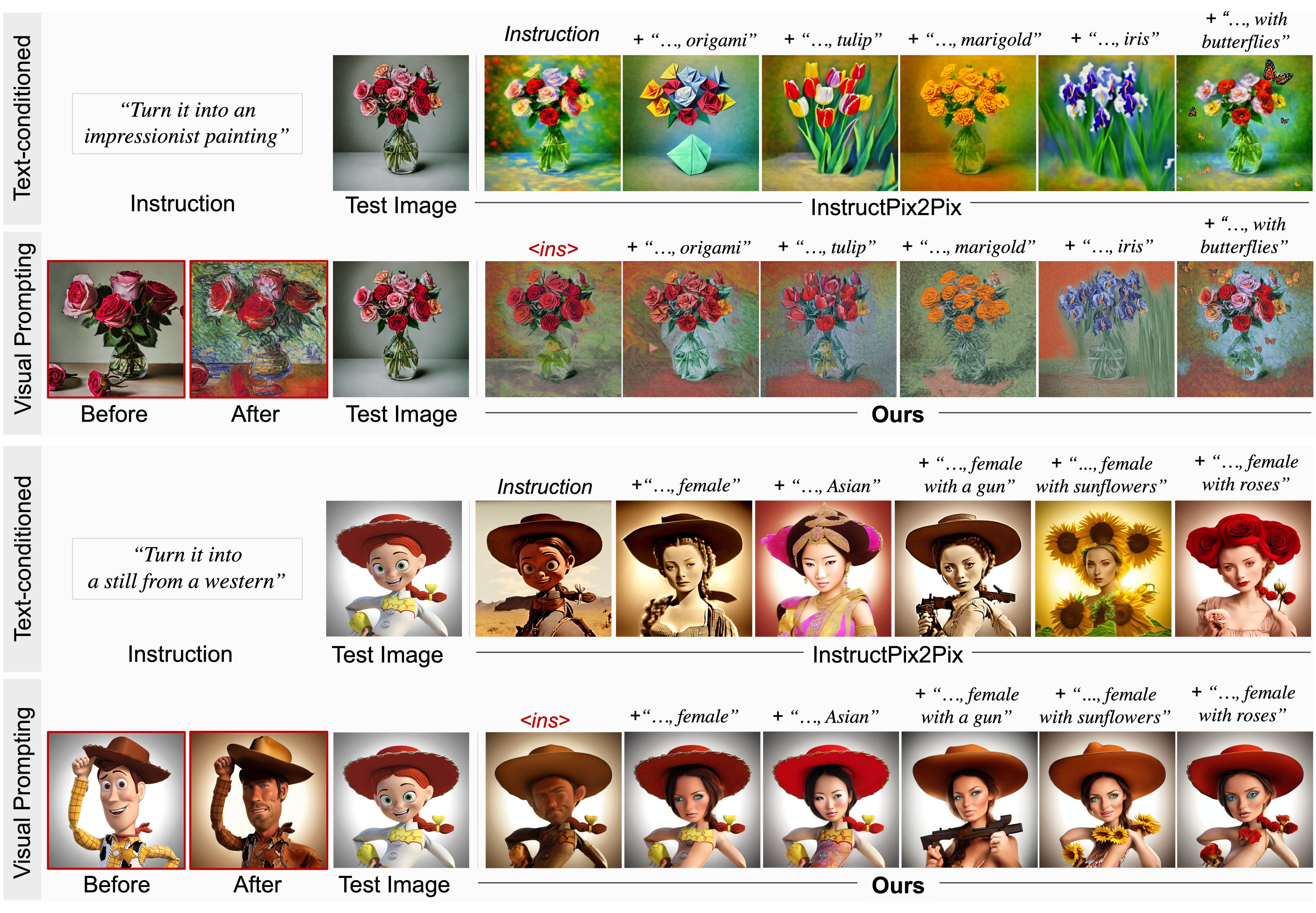

Text-conditioned image editing has emerged as a powerful tool for editing images. However, in many situations, language can be ambiguous and ineffective in describing specific image edits. When faced with such challenges, visual prompts can be a more informative and intuitive way to convey ideas.

We present a method for image editing via visual prompting.

Given pairs of example that represent the "before" and "after" images of an edit, our goal is to learn a text-based editing direction that can be used to perform the same edit on new images.

We leverage the rich, pretrained editing capabilities of text-to-image diffusion models by inverting visual prompts into editing instructions.

Our results show that with just one example pair, we can achieve competitive results compared to state-of-the-art text-conditioned image editing frameworks.

| Prior Work: Text-conditioned image editing | 📍 Ours: Visual Prompting Image Editing | |||||

| Text Prompt | Test Image | Output | Visual Prompt: Before → After | Test Image | Output | |

| "Make it a drawing" |  |

|

→ → |

|

|

|

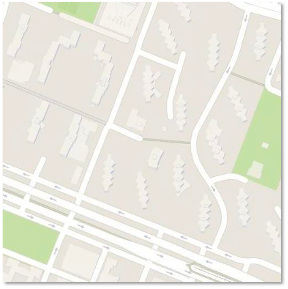

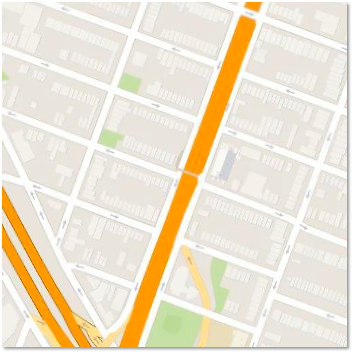

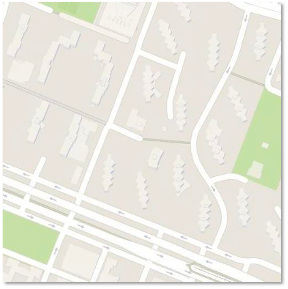

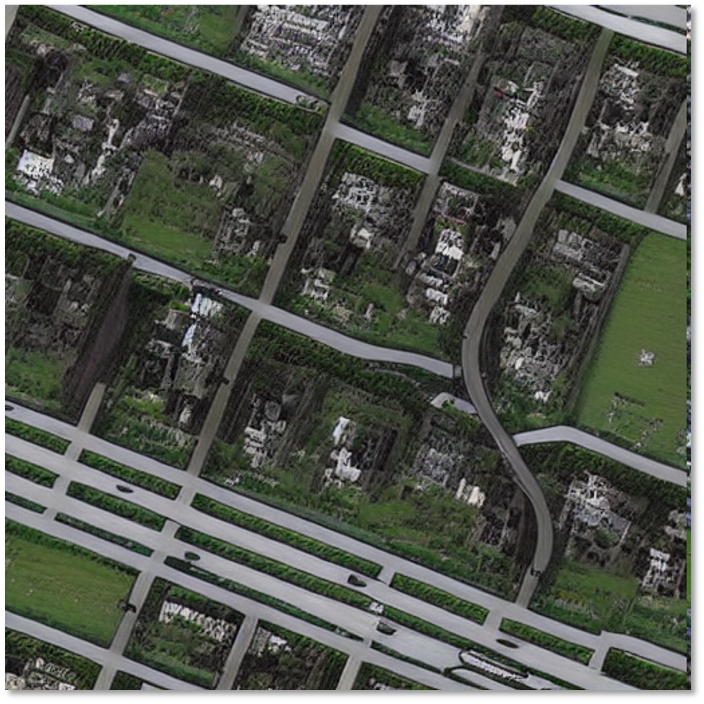

| "Turn it into an aerial photo" |  |

|

→ → |

|

|

|

| Text-conditioned scheme (Prior work): Model takes an input image and a text prompt to perform the desired edit. |

Visual prompting scheme (Ours): Given a pair of before-after images of an edit, our goal is to learn an implicit text-based editing instruction, and then apply it to new images. | |||||

Before:  |

After:  |

🧚 Inspired by this reddit, we tested Visii + InstructPix2Pix with Starbucks and Gandour logos. | ||||

Test:  |

<ins> + "Wonder Woman"  |

<ins> + "Scarlet Witch"  |

<ins> + "Daenerys Targaryen"  |

<ins> + "Neytiri in Avatar" |

<ins> + "She-Hulk"  |

<ins> + "Maleficent"  |

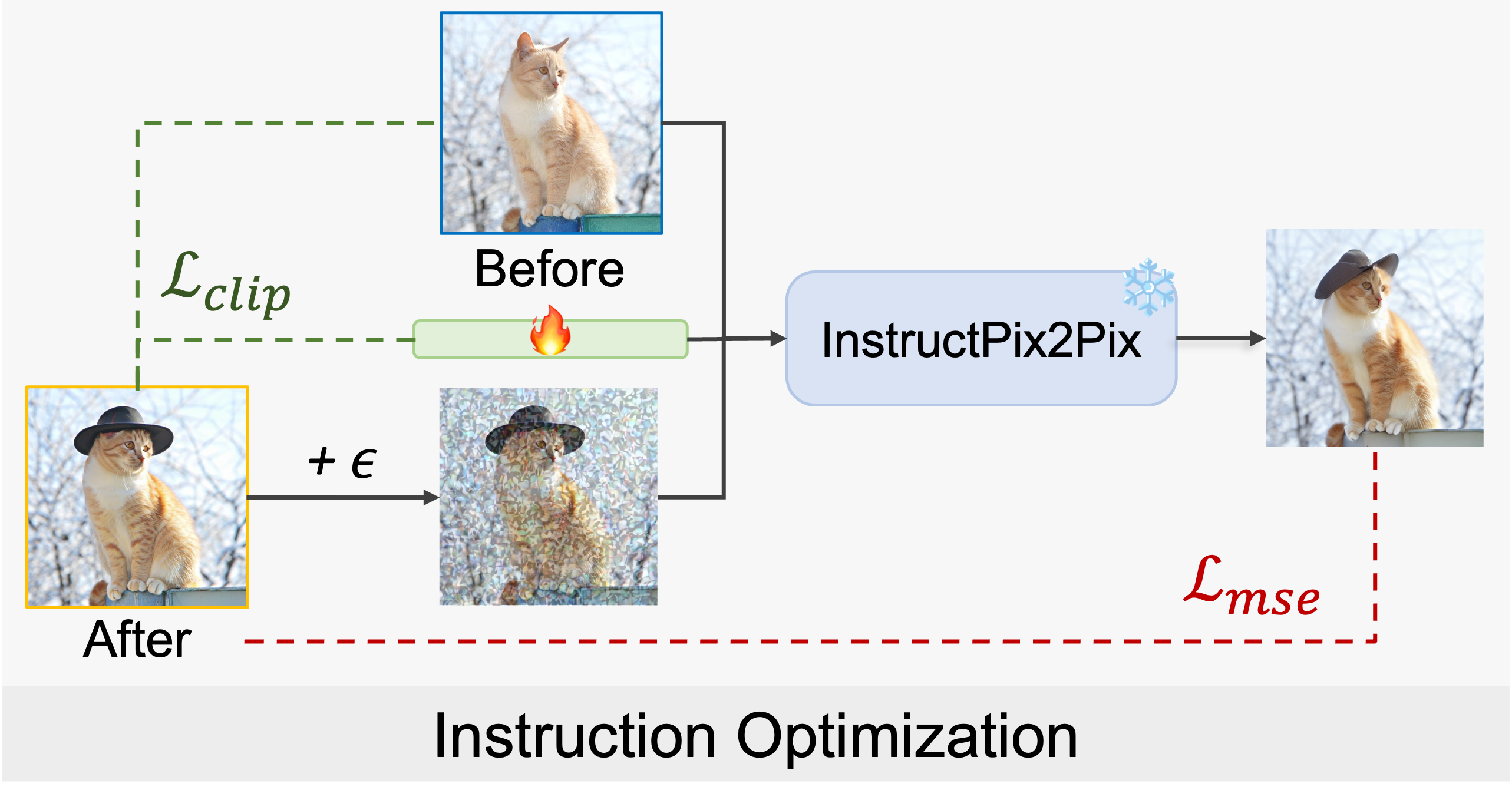

Given an example before-and-after image pair, we optimize the latent text instruction that converts the “before” image to the “after” image using a frozen image editing diffusion model, e.g., InstructPix2Pix.

|

|

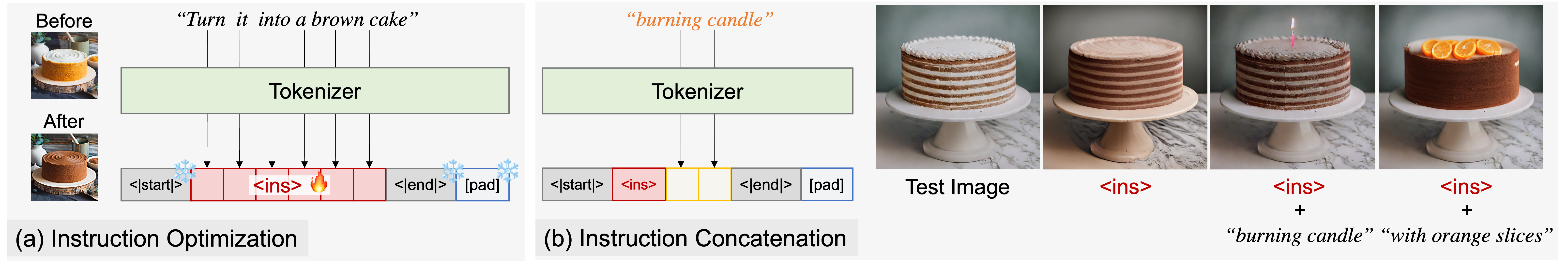

| Instruction Optimization: We optimize instruction embedding ‹ins›. | Instruction Concatenation: During test time, we can add extra information into the learned instruction ‹ins› to further guide the edit. |

We can concatenate extra information into the learned instruction ‹ins› to navigate the edit. This allows us to achieve more fine-grained control over the resulting images.

We can concatenate extra information into the learned instruction ‹ins› to navigate the edit. This allows us to achieve more fine-grained control over the resulting images.